1 Overview

The Dynamic Learning Maps® (DLM®) Alternate Assessment System assesses student achievement in English language arts (ELA), mathematics, and science for students with the most significant cognitive disabilities in grades 3–8 and high school. The purpose of the system is to improve academic experiences and outcomes for students with the most significant cognitive disabilities by setting high and actionable academic expectations and providing appropriate and effective supports to educators. Results from the DLM alternate assessment are intended to support interpretations about what students know and are able to do and to support inferences about student achievement in the given subject. Results provide information that can guide instructional decisions as well as information for use with state accountability programs.

The DLM System is developed and administered by Accessible Teaching, Learning, and Assessment Systems (ATLAS), a research center within the University of Kansas’s Achievement and Assessment Institute. The DLM System is based on the core belief that all students should have access to challenging, grade-level content. Online DLM assessments give students with the most significant cognitive disabilities opportunities to demonstrate what they know in ways that traditional paper-and-pencil assessments cannot.

A complete technical manual was created after the first operational administration of ELA and mathematics in 2014–2015. Because the DLM System adopts a continuous improvement model, incremental changes are implemented and additional technical evidence is collected each year; both are described in annual technical manual updates. The 2015–2016 through 2020–2021 technical manual updates summarize only the new information and evidence for each year and refer the reader to the 2014–2015 version for complete descriptions of the system. Examples of DLM System changes across years include updates to the online test delivery engine, item banks, and administration procedures.

The 2021–2022 manual is a complete technical manual that provides comprehensive information and evidence for the ELA and mathematics assessment system. The manual provides a full description of the current DLM System and also describes the more substantive changes made to the system over time. To help orient readers to this manual, a glossary of common terms is provided in Appendix A.1. Due to differences in the development timeline for science, the science technical manual is prepared separately (see Dynamic Learning Maps Consortium, 2022a).

1.1 Current DLM Collaborators for Development and Implementation

The DLM System was initially developed by a consortium of state education agencies (SEAs) beginning in 2010 and expanding over the years, with a focus on ELA and mathematics. The development of a DLM science assessment began with a subset of the participating SEAs in 2014. Due to the differences in the development timelines, separate technical manuals are prepared for ELA and mathematics and for science. During the 2021–2022 academic year, DLM assessments were available to students in 21 states: Alaska, Arkansas, Colorado, Delaware, District of Columbia, Illinois, Iowa, Kansas, Maryland, Missouri, New Hampshire, New Jersey, New Mexico, New York, North Dakota, Oklahoma, Pennsylvania, Rhode Island, Utah, West Virginia, and Wisconsin. One SEA partner, Colorado, administered assessments only in ELA and mathematics; another, the District of Columbia, administered assessments only in science. The DLM Governance Board is composed of two representatives from the SEA of each member state. Representatives have expertise in special education and state assessment administration. The DLM Governance Board advises on the administration, maintenance, and enhancement of the DLM System.

In addition to ATLAS and governance board states, other key partners include the Center for Literacy and Disability Studies at the University of North Carolina at Chapel Hill and Agile Technology Solutions at the University of Kansas.

The DLM System is also supported by a Technical Advisory Committee (TAC). DLM TAC members possess decades of expertise, including in large-scale assessments, accessibility for alternate assessments, diagnostic classification modeling, and assessment validation. The DLM TAC provides advice and guidance on technical adequacy of the DLM assessments.

1.2 Student Population

The DLM System serves students with the most significant cognitive disabilities, sometimes referred to as students with extensive support needs, who are eligible to take their state’s alternate assessment based on alternate academic achievement standards. This population is, by nature, diverse in learning style, communication mode, support needs, and demographics.

Students with the most significant cognitive disabilities have a disability or multiple disabilities that significantly impact intellectual functioning and adaptive behavior. When adaptive behaviors are significantly impacted, the individual is unlikely to develop the skills to live independently and function safely in daily life. In other words, significant cognitive disabilities impact students in and out of the classroom and across life domains, not just in academic settings. The DLM System is designed for students with these significant instruction and support needs.

The DLM System provides the opportunity for students with the most significant cognitive disabilities to show what they know, rather than focusing on deficits (Nitsch, 2013). These are students for whom general education assessments, even with accommodations, are not appropriate. These students learn academic content aligned to grade-level content standards, but at reduced depth, breadth, and complexity. These content standards, called Essential Elements (EEs), are derived from the Common Core State Standards (CCSS; National Governors Association Center for Best Practices and Council of Chief State School Officers, 2010), which are often referred to in this manual as college and career readiness standards. The EEs are the learning targets for DLM assessments for grades 3–12 in ELA and mathematics. Chapter 2 of this manual provides a complete description of the content structures for the DLM assessment, including the EEs.

While all states provide additional interpretation and guidance to their districts, three general participation guidelines are considered for a student to be eligible for the DLM alternate assessment.

- The student has a significant cognitive disability, as evident from a review of the student records that indicates a disability or multiple disabilities that significantly impact intellectual functioning and adaptive behavior.

- The student is primarily being instructed (or taught) using the DLM EEs as content standards, as evidenced by the goals and instruction listed in the student's Individualized Education Program (IEP) that are linked to the enrolled grade-level DLM EEs and address knowledge and skills that are appropriate and challenging for this student.

- The student requires extensive direct individualized instruction and substantial supports to achieve measurable gains in the grade- and age-appropriate curriculum. The student (a) requires extensive, repeated, individualized instruction and support that is not of a temporary or transient nature and (b) uses substantially adapted materials and individualized methods of accessing information in alternative ways to acquire, maintain, generalize, demonstrate, and transfer skills across multiple settings.

The DLM System eligibility criteria also provide guidance on specific considerations that are not acceptable for determining student participation in the alternate assessment:

- a disability category or label

- poor attendance or extended absences

- native language, social, cultural, or economic differences

- expected poor performance on the general education assessment

- receipt of academic or other services

- educational environment or instructional setting

- percent of time receiving special education

- English Language Learner status

- low reading or achievement level

- anticipated disruptive behavior

- impact of student scores on accountability system

- administrator decision

- anticipated emotional duress

- need for accessibility supports (e.g., assistive technology) to participate in assessment

1.3 Assessment

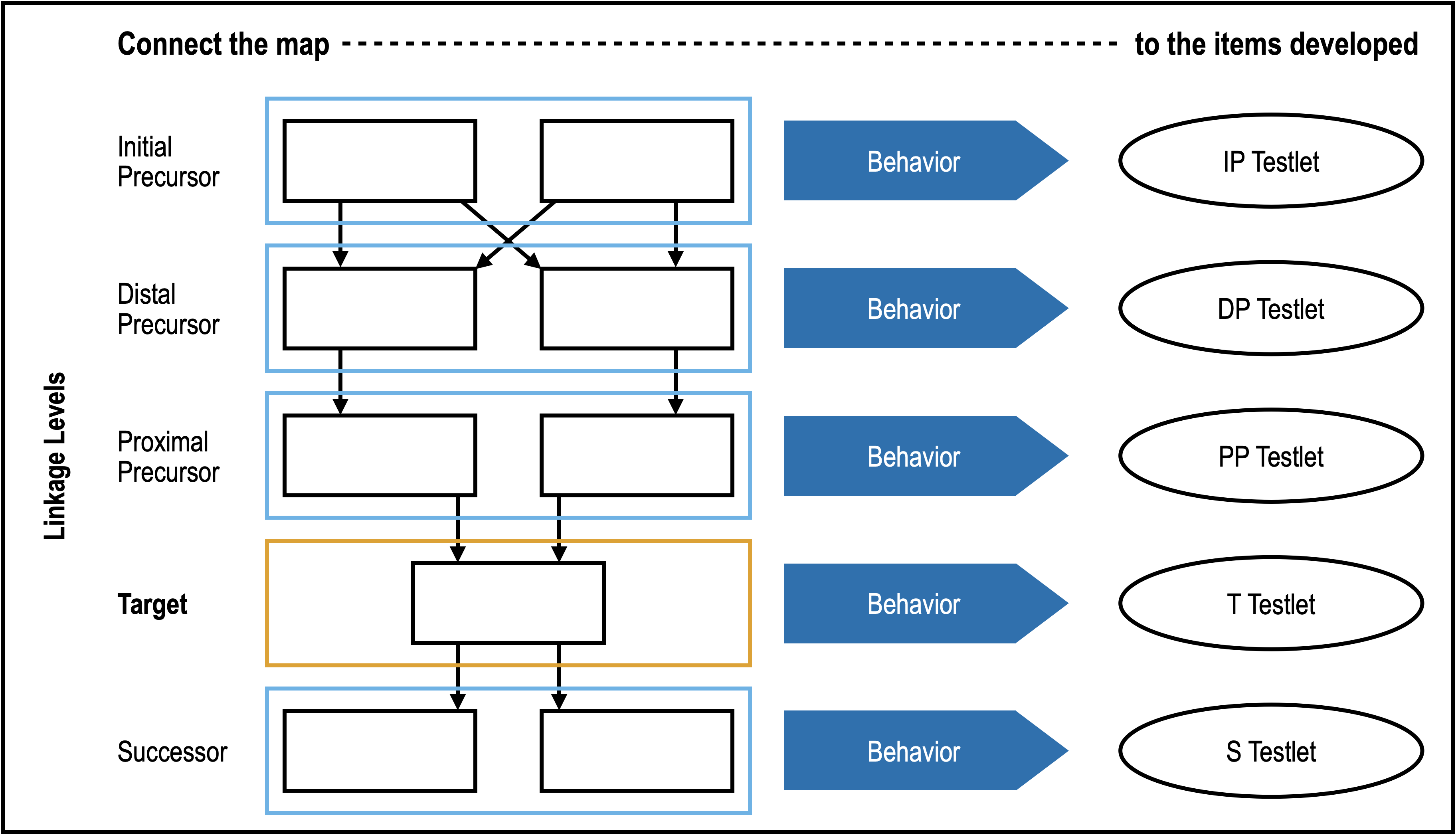

The DLM assessments are delivered as a series of testlets, each of which contains an unscored engagement activity and three to nine items. The assessment items are aligned to specific nodes in the learning map neighborhoods corresponding to each EE. These neighborhoods are illustrations of the connections between the knowledge and skills necessary to meet extended grade-level academic content standards. Individual concepts and skills are represented as points on the map, known as nodes. Small collections of nodes are called linkage levels, which provide access to the EEs at different levels of complexity to meet the needs of all students taking the DLM assessments. Assessment items are written to align to map nodes at one of the five linkage levels and are clustered into testlets (see Figure 1.1; for a complete description of the content structures, including learning map neighborhoods and nodes, and linkage levels, see Chapter 2 of this manual). Except for writing testlets, each testlet measures a single linkage level.

Figure 1.1: Relationship Between DLM Map Nodes and Items in Testlets

Note. Small black boxes represent nodes in the DLM learning map. Blue and orange boxes represent collections of nodes in linkage levels. The orange box denotes the Target linkage level for the EE. There may be more than one node at any linkage level.
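The content structure described above can be pictured as a small data model. The following is a minimal sketch, not the operational Kite Suite schema: the class names, identifiers, EE code format, and the use of integers 1 to 5 for linkage levels are all assumptions made for illustration. Only the counts (five linkage levels, three to nine items, one unscored engagement activity) come from the text. Because each testlet here records a single linkage level, the sketch does not cover writing testlets.

```python
from dataclasses import dataclass
from typing import Tuple

NUM_LINKAGE_LEVELS = 5        # five linkage levels per EE (from the text)
MIN_ITEMS, MAX_ITEMS = 3, 9   # three to nine items per testlet (from the text)


@dataclass(frozen=True)
class Item:
    item_id: str   # hypothetical identifier
    node_id: str   # learning map node the item is written to


@dataclass(frozen=True)
class Testlet:
    testlet_id: str            # hypothetical identifier
    essential_element: str     # EE code; the format used below is made up
    linkage_level: int         # 1..5; numeric labels are an assumption
    engagement_activity: str   # unscored, so it carries no items
    items: Tuple[Item, ...]

    def __post_init__(self) -> None:
        # Enforce the structural constraints stated in the text.
        if not 1 <= self.linkage_level <= NUM_LINKAGE_LEVELS:
            raise ValueError("linkage_level must be between 1 and 5")
        if not MIN_ITEMS <= len(self.items) <= MAX_ITEMS:
            raise ValueError("a testlet holds three to nine items")


def make_testlet(n_items: int, level: int = 3) -> Testlet:
    """Build an example testlet whose items align to two hypothetical nodes."""
    items = tuple(Item(f"item-{i}", f"node-{i % 2}") for i in range(n_items))
    return Testlet("T-001", "EE-EXAMPLE", level,
                   "Shared reading of a short text", items)
```

Several items may point at the same node, and a linkage level may contain more than one node, which is why the node identifier lives on the item rather than on the testlet.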

Assessment blueprints consist of the EEs, or targeted skills that align most closely with grade-level expectations, prioritized for assessment by the DLM Governance Board. The blueprint specifies the number of EEs that must be assessed in each subject. To achieve blueprint coverage, each student is administered a series of testlets. Testlet delivery and test management are achieved through an online platform, Kite® Suite. Kite Suite contains applications for educators to manage student data and assign testlets to students, and also contains a portal through which students can access testlets. Student results are based on evidence of mastery of the linkage levels for every assessed EE. The student interface used to administer the DLM assessments was designed specifically for students with the most significant cognitive disabilities. It maximizes space available to display content, decreases space devoted to tool-activation buttons within a testing session, and minimizes the cognitive load related to test navigation and response entry. More information about the Kite Suite can be found in Chapter 4 of this manual.

For all aspects of the DLM System, our overarching goal is to align with the latest research from a full range of accessibility lenses (e.g., universal design of assessment, physical and sensory disabilities, special education) to ensure the assessments are accessible for the widest range of students who will be interacting with the content. In order to exhibit the assessed skills, students must be able to interact with the assessment by the means most appropriate for them. Thus, the DLM assessments provide different pathways of student interaction and ensure those means can be used while maintaining the validity of the inferences from and intended uses of the DLM System. These pathways begin in the earliest stages of assessment and content development, from the creation of the learning maps to item writing and assessment administration. We seek both content adherence and accessibility by maximizing the quality of the assessment process while preserving evidence of the targeted cognition. This balance of ensuring accessibility for all students while protecting the validity of the intended uses is discussed throughout this manual where appropriate. Additionally, the overarching goal of accessible content is reflected in the Theory of Action for the DLM System, which is described in Section 1.5.

1.4 Assessment Models

There are two assessment models for the DLM alternate assessment. Each state chooses its own model.

Instructionally Embedded model. There are two instructionally embedded testing windows: fall and spring. The assessment windows are structured so that testlets can be administered at appropriate points in instruction. Each window is approximately 15 weeks long. Educators have some choice of which EEs to assess, within constraints defined by the assessment blueprint. For each EE, the system recommends a linkage level for assessment, and the educator may accept the recommendation or choose another linkage level. Recommendations are based on information collected about the student’s expressive communication and academic skills. At the end of the year, summative results are based on mastery estimates for the assessed linkage levels for each EE (including performance on all testlets from both the fall and spring windows) and are used for accountability purposes. There are different pools of operational testlets for the fall and spring windows. In 2021–2022, the states adopting the Instructionally Embedded model were Arkansas, Delaware, Iowa, Kansas, Missouri, and North Dakota.

Year-End model. During a single operational testing window in the spring, all students take testlets that cover the whole blueprint. The window is approximately 13 weeks long, and test administrators may administer the required testlets throughout the window. Students are assigned their first testlet based on information collected about their expressive communication and academic skills. Each testlet assesses one linkage level and EE. The linkage level for each subsequent testlet varies according to student performance on the previous testlet. Summative assessment results reflect the student’s performance and are used for accountability purposes each school year. In Year-End states, instructionally embedded assessments are available during the school year but are optional and do not count toward summative results. In 2021–2022, the states adopting the Year-End model were Alaska, Colorado, Illinois, Maryland, New Hampshire, New Jersey, New Mexico, New York, Oklahoma, Pennsylvania, Rhode Island, Utah, West Virginia, and Wisconsin.
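The Year-End routing idea, in which the linkage level of the next testlet depends on performance on the previous one, can be sketched as follows. This section does not state the operational routing rule, so the proportion-correct cutoffs, the one-level step size, and the function name below are hypothetical; they only illustrate the general shape of such a rule.

```python
MIN_LEVEL, MAX_LEVEL = 1, 5   # five linkage levels per EE (from the text)

# Hypothetical cutoffs, chosen only for illustration.
MOVE_UP_AT = 0.80     # proportion correct at or above which the level rises
MOVE_DOWN_AT = 0.35   # proportion correct at or below which the level drops


def next_linkage_level(current_level: int, n_correct: int, n_items: int) -> int:
    """Return the linkage level for the next testlet under the assumed rule."""
    if n_items <= 0:
        raise ValueError("n_items must be positive")
    if not MIN_LEVEL <= current_level <= MAX_LEVEL:
        raise ValueError("current_level must be between 1 and 5")

    proportion = n_correct / n_items
    if proportion >= MOVE_UP_AT:
        step = 1
    elif proportion <= MOVE_DOWN_AT:
        step = -1
    else:
        step = 0

    # Stay inside the five available linkage levels.
    return max(MIN_LEVEL, min(MAX_LEVEL, current_level + step))
```

Under these assumed cutoffs, a student who answers 4 of 5 items correctly at level 3 would next be routed to level 4, and a student already at the highest or lowest level stays there.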

Information in this manual is common to both models wherever possible and is specific to the Year-End model where appropriate. The Instructionally Embedded model has a separate technical manual.

1.5 Theory of Action and Interpretive Argument

The Theory of Action that guided the design of the DLM System was formulated in 2011, revised in December 2013, and revised again in 2019. It expresses the belief that high expectations for students with the most significant cognitive disabilities, combined with appropriate educational supports and diagnostic tools for educators, result in improved academic experiences and outcomes for students and educators.

The process of articulating the Theory of Action started with identifying critical problems that characterize large-scale assessment of students with the most significant cognitive disabilities so that the DLM System design could alleviate these problems. For example, traditional assessment models treat knowledge as unidimensional and are independent of teaching and learning, yet teaching and learning are multidimensional activities and are central to strong educational systems. Also, traditional assessments focus on standardized methods and do not allow various, non-linear approaches for demonstrating learning even though students learn in various and non-linear ways. In addition, using assessments for accountability pressures educators to use assessments as models for instruction with assessment preparation replacing best-practice instruction. Furthermore, traditional assessment systems often emphasize objectivity and reliability over fairness and validity. Finally, negative, unintended consequences for students must be addressed and eradicated.

The DLM Theory of Action expresses a commitment to provide students with the most significant cognitive disabilities access to highly flexible cognitive and learning pathways and an assessment system that is capable of validly and reliably evaluating their achievement. Ultimately, students will make progress toward higher expectations, educators will make instructional decisions based on data, educators will hold higher expectations of students, and state and district education agencies will use results for monitoring and resource allocation.

The DLM Governance Board adopted an argument-based approach to assessment validation. The validation process began in 2013 by defining with governance board members the policy uses of DLM assessment results. We followed this with a three-tiered approach, which included specification of 1) a Theory of Action defining statements in the validity argument that must be in place to achieve the goals of the system; 2) an interpretive argument defining propositions that must be evaluated to support each statement in the Theory of Action; and 3) validity studies to evaluate each proposition in the interpretive argument.

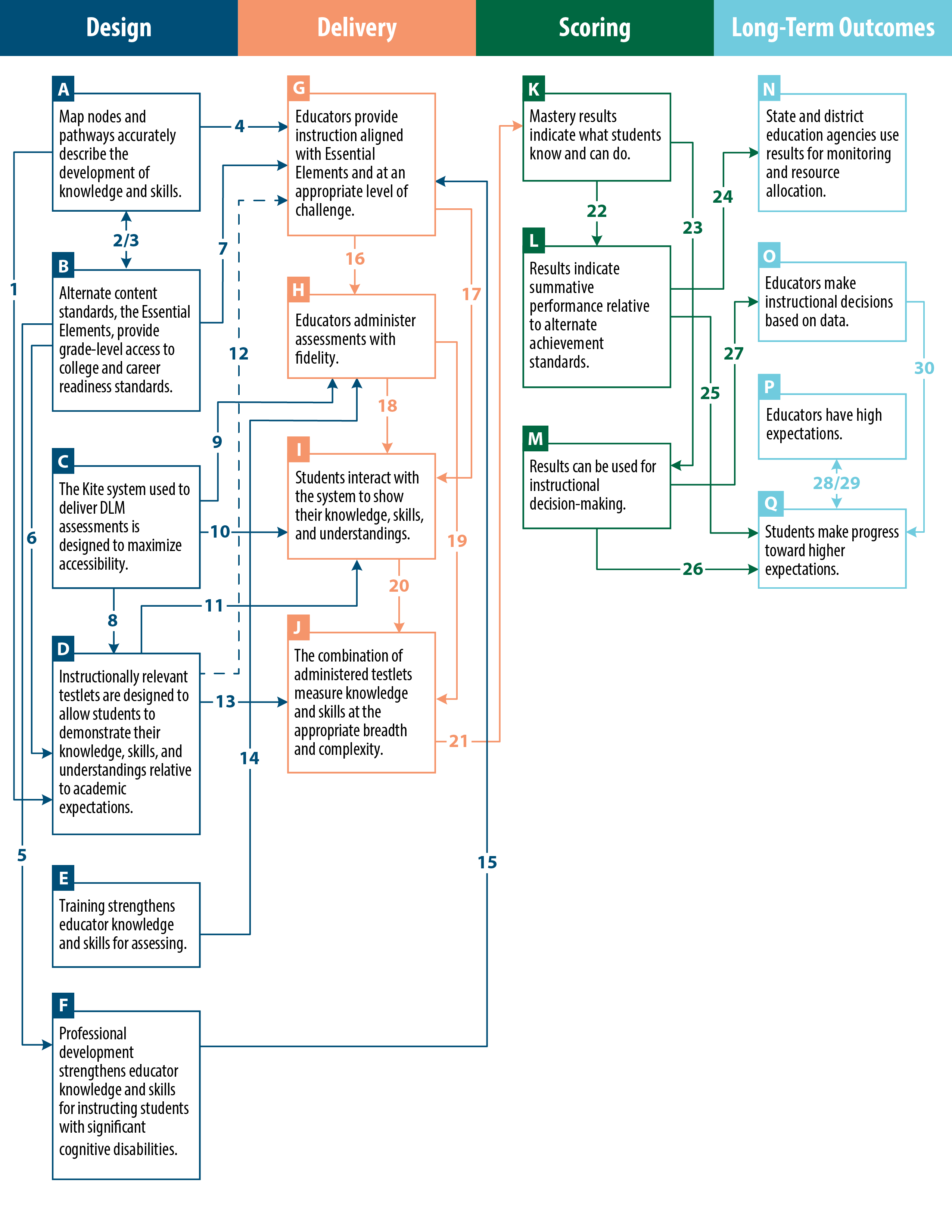

After identifying these overall guiding principles and anticipated outcomes, specific elements of the DLM Theory of Action were articulated to inform assessment design and to highlight the associated validity arguments. The Theory of Action includes the assessment’s intended effects (long-term outcomes); statements related to design, delivery, and scoring; and action mechanisms (i.e., connections between the statements; see Figure 1.2). The chain of reasoning in the Theory of Action is demonstrated broadly by the order of the four sections from left to right. Dashed lines represent connections that are present when the optional instructionally embedded assessments are used. Design statements serve as inputs to delivery, which informs scoring and reporting, which collectively lead to the long-term outcomes for various stakeholders. The chain of reasoning is made explicit by the numbered arrows between the statements.

Figure 1.2: Dynamic Learning Maps Theory of Action

1.6 Key Features

Consistent with the Theory of Action and interpretive argument, key elements were identified to guide the design and delivery of the DLM System. The following section briefly describes the key features of the DLM System, followed by an overview of chapters indicating where to find evidence for each statement in the Theory of Action. Terms are defined in the glossary (Appendix A.1).

Fine-grained learning maps that describe how students acquire knowledge and skills.

Learning maps are a unique key feature of the DLM System and drive the development of all other components. While the DLM learning maps specify targeted assessment content, they also reflect a synthesis of research on the relationships and learning pathways among different concepts, knowledge, and cognitive processes. Therefore, DLM maps demonstrate multiple ways that students can acquire the knowledge and skills necessary to reach targeted expectations, and they provide a framework that supports inferences about student learning needs (Bechard et al., 2012). The use of DLM maps helps to realize a vision of a cohesive, comprehensive system of assessment. DLM learning map development is described in Chapter 2 of this manual.

A set of learning targets for instruction and assessment, as defined by the EEs and linkage levels, that provide grade-level access to college and career readiness standards.

Crucial to the use of fine-grained learning maps for instruction and test development is the selection of critical nodes that serve as learning targets aligned to the grade level expectations defined in the EEs. These are accompanied by the selection of nodes that build up to and extend the knowledge, skills, and abilities required to achieve the EEs for each grade and subject (i.e., linkage levels). This neighborhood of nodes forms a local EE-specific learning progression that is informative to both instruction and assessment. The development of EEs and the selection of nodes for assessment at each linkage level are described in Chapter 2 of this manual.

Instructionally relevant assessments that reinforce the primacy of instruction and are designed to allow students to demonstrate their knowledge, skills, and understandings relative to academic expectations.

The development of an instructionally relevant assessment begins by creating items using principles of evidence-centered design and Universal Design for Learning and linking related items together into meaningful groups, called testlets. These assessments necessarily take different forms depending on the learning characteristics of students and the concepts being taught. Item and testlet design are described in Chapter 3 of this manual.

Accessibility by design.

Accessibility is a prerequisite to validity, the degree to which interpretation of test results is justifiable for a particular purpose and supported by evidence and theory (American Educational Research Association et al., 2014). Therefore, throughout all phases of development, the DLM System was designed with accessibility in mind to support both learning and assessment. Students must understand what is being asked in an item or task and have the tools to respond in order to demonstrate what they know and can do (Karvonen, Bechard, et al., 2015). The DLM alternate assessment provides accessible content, accessible delivery via technology, and student-specific linkage level assignment. Because all students taking an alternate assessment based on alternate academic achievement standards are students with significant cognitive disabilities, accessibility supports are universally available. The emphasis is on helping educators select the appropriate accessibility features and tools for each individual student. Accessibility considerations are described in Chapter 3 (testlet development) and Chapter 4 (accessibility during test administration) of this manual.

Training and professional development that strengthens educator knowledge and skills for instructing and assessing students with significant cognitive disabilities.

The DLM System provides comprehensive support and training to state education agency staff and local educators so that they can administer the assessment with fidelity. The DLM System also supports educators with professional development modules that address instruction in ELA and mathematics and help educators create Individualized Education Programs that are aligned with the DLM EEs. Modules are designed to meet the needs of all educators, especially those in rural and remote areas, offering educators just-in-time, on-demand training. DLM professional development modules support educator continuing education through learning objectives designed to deepen knowledge and skills in instruction and assessment for students with the most significant cognitive disabilities. Training and professional development are described in Chapter 9 of this manual.

Assessment results that are readily actionable.

Due to the unique characteristics of a map-based system, DLM assessments require new approaches to psychometric analysis and modeling, with the goal of assuring accurate inferences about student performance relative to the content as it is organized in the DLM learning maps. Each EE has related nodes at five associated levels of complexity (i.e., linkage levels). Diagnostic classification modeling is used to estimate a student’s likelihood of mastery for each assessed EE and linkage level. A student’s overall performance level in the subject is determined by aggregating linkage level mastery information across EEs. The DLM modeling approach is described in Chapter 5, information about standard setting is described in Chapter 6, and the technical quality of the assessment and student performance is summarized in Chapter 7 of this manual.
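To make "likelihood of mastery" concrete, the expression below shows the generic posterior probability of mastery under a two-class latent class model with conditionally independent items. It is an illustrative form only, and the notation is an assumption introduced here rather than taken from this manual: $\alpha \in \{0, 1\}$ is the mastery status for one EE and linkage level, $\mathbf{x} = (x_1, \dots, x_J)$ are the scored item responses, $\pi$ is the base rate of mastery, and $p_{1j}$ and $p_{0j}$ are the probabilities of a correct response to item $j$ for masters and nonmasters. The operational model and its parameterization are described in Chapter 5.

```latex
P(\alpha = 1 \mid \mathbf{x}) =
\frac{\pi \prod_{j=1}^{J} p_{1j}^{x_j} (1 - p_{1j})^{1 - x_j}}
     {\pi \prod_{j=1}^{J} p_{1j}^{x_j} (1 - p_{1j})^{1 - x_j}
      + (1 - \pi) \prod_{j=1}^{J} p_{0j}^{x_j} (1 - p_{0j})^{1 - x_j}}
```

The numerator is the joint probability of the observed responses and mastery; the denominator sums that quantity over both mastery states, so the result lies between 0 and 1.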

The DLM scoring model supports reports that can be immediately used to guide instruction and describe levels of mastery. Student reports include two parts: a performance profile and a learning profile. The performance profile aggregates linkage level mastery information for reporting on each conceptual area and for the subject overall. The learning profile provides fine-grained skill mastery for each assessed EE and linkage level and is designed to support educators in making individualized instructional decisions. Score report design is described in Chapter 7 of this manual.
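The two report parts can be viewed as two summaries of the same mastery records. The sketch below assumes a hypothetical record layout (`MasteryResult`) and assumes that "aggregating" means counting mastered linkage levels; neither the field names nor the counting rule is specified in this section, and no performance level cut points are applied.

```python
from collections import defaultdict
from typing import Dict, Iterable, NamedTuple


class MasteryResult(NamedTuple):
    conceptual_area: str      # hypothetical label, e.g. "CA-1"
    essential_element: str    # hypothetical EE code
    linkage_level: int        # 1..5; numeric labels are an assumption
    mastered: bool            # mastery decision for this EE and level


def learning_profile(results: Iterable[MasteryResult]) -> Dict[str, Dict[int, bool]]:
    """Fine-grained view: mastery status for each EE and linkage level."""
    profile: Dict[str, Dict[int, bool]] = defaultdict(dict)
    for r in results:
        profile[r.essential_element][r.linkage_level] = r.mastered
    return dict(profile)


def performance_profile(results: Iterable[MasteryResult]) -> dict:
    """Aggregated view: mastered-level counts per conceptual area and overall."""
    by_area: Dict[str, int] = defaultdict(int)
    for r in results:
        # Adding 0 still registers areas in which nothing was mastered.
        by_area[r.conceptual_area] += int(r.mastered)
    return {
        "by_conceptual_area": dict(by_area),
        "subject_total": sum(by_area.values()),
    }
```

Keeping the record-level data separate from both summaries mirrors the distinction in the text: the learning profile preserves every EE and linkage level, while the performance profile reduces the same records to area and subject totals.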

1.7 Technical Manual Overview

This manual provides evidence to support the assertion of technical quality and the validity of intended uses of the assessment based on statements in the Theory of Action.

Chapter 1 provides the theoretical underpinnings of the DLM System, including a description of the DLM collaborators, the target student population, an overview of the assessment, and an introduction to the Theory of Action and interpretive argument.

Chapter 2 describes the content structures of the DLM System and addresses the design statements in the Theory of Action that the map nodes and pathways accurately describe the development of knowledge and skills, and that the alternate content standards (i.e., the EEs) provide grade level access to college and career readiness standards. The chapter describes how EEs were used to build bridges from grade-level college and career readiness content standards to academic expectations for students with the most significant cognitive disabilities. Chapter 2 also describes how the EEs were developed, the process by which the DLM learning maps were developed, and how the learning maps were linked to the EEs. Extensive, detailed work was necessary to create the DLM learning maps in light of the CCSS and the needs of the student population. Based on in-depth literature reviews and research as well as extensive input from experts and practitioners, the DLM learning maps are the conceptual and content basis for the DLM System. The chapter describes how the learning maps are organized by claims and conceptual areas, and the relationship between elements in the DLM System. Chapter 2 then describes the expert evaluation of the learning map structure, the development of the assessment blueprints and subsequent blueprint revision, and the external alignment study for the DLM System. The chapter concludes with a description of the learning maps that are used for the operational assessment.

Chapter 3 outlines procedural evidence related to test content and item quality, addressing the Theory of Action’s statement that instructionally relevant testlets are designed to allow students to demonstrate their knowledge, skills, and understandings relative to academic expectations. The chapter relates how evidence-centered design was used to develop testlets, the basic unit of test delivery for the DLM alternate assessment. Further, the chapter describes how nodes in the DLM learning maps and EEs were used to develop concept maps to specify item and testlet development. Using principles of Universal Design, the entire development process accounted for the student population’s characteristics, including accessibility and bias considerations. Chapter 3 includes summaries of external reviews for content, bias, and accessibility, as well as summaries of field tests. The final portion of the chapter describes results of differential item functioning (DIF) analyses conducted to help ensure that testlets are fair for all student subgroups.

Chapter 4 provides an overview of the fundamental design elements that characterize test administration and how each element supports the DLM Theory of Action, specifically the statement that the system used to deliver DLM assessments is designed to maximize accessibility. The chapter describes the assessment delivery system (Kite Suite) and assessment modes (computer delivery and educator delivery). The chapter also relates how students are assigned testlets and describes the test administration process, resources that support test administrators, and test security. Chapter 4 also describes evidence related to each of the delivery statements in the Theory of Action: the combination of administered testlets measures knowledge and skills at the appropriate breadth, depth, and complexity; educators provide instruction aligned with EEs and at an appropriate level of challenge; educators administer assessments with fidelity; and students interact with the system to show their knowledge, skills, and understandings.

Chapter 5 addresses the Theory of Action’s scoring statement that mastery results indicate what students know and can do. The chapter demonstrates how the DLM project draws upon a well-established research base in cognition and learning theory and uses operational psychometric methods that are relatively uncommon in large-scale assessments to provide feedback about student progress and learning acquisition. This chapter describes the psychometric model that underlies the DLM project and describes the process used to estimate item and student parameters from student test data and evaluate model fit.

Chapter 6 addresses the scoring statement that results indicate summative performance relative to alternate achievement standards. This chapter describes the methods, preparations, procedures, and results of the standard setting meeting and the follow-up evaluation of the impact data and cut points based on the 2014–2015 standard setting study, as well as the standards adjustment process that occurred in spring 2022. This chapter also describes the process of developing grade- and subject-specific performance level descriptors in ELA and mathematics.

Chapter 7 reports the 2021–2022 operational results. The chapter first reports student participation data, and then details the percent of students achieving at each performance level, as well as subgroup performance by gender, race, ethnicity, and English learner status. The chapter describes scoring rules used to determine linkage level mastery and the percent of students who showed mastery at each linkage level. The chapter next describes the inter-rater reliability of writing sample ratings. The chapter also presents evidence related to postsecondary opportunities for students taking DLM assessments. Finally, the chapter addresses the statement that results can be used for instructional decision-making with a description of data files, score reports, and interpretive guidance.

Chapter 8 focuses on reliability evidence, including a description of the methods used to evaluate assessment reliability and a summary of results by the linkage level, EE, conceptual area, and subject (overall performance). This evidence is used to support all three scoring statements in the Theory of Action.

Chapter 9 addresses the Theory of Action’s statements that training strengthens educator knowledge and skills for assessing and that professional development strengthens educator knowledge and skills for instructing and assessing students with significant cognitive disabilities. The chapter describes the training and professional development that were offered across states administering DLM assessments, including the 2021–2022 training for state and local education agency staff, the required test administrator training, and the professional development available to support instruction. Participation rates and evaluation results from 2021–2022 instructional professional development are included.

Chapter 10 synthesizes the evidence provided in the previous chapters. It evaluates how the evidence supports the statements in the Theory of Action as well as the long-term outcomes: students make progress toward higher expectations, educators make instructional decisions based on data, educators have high expectations, and state and district education agencies use results for monitoring and resource allocation. The chapter ends with a description of our future research and ongoing initiatives for continuous improvement.